In 2009 Apple released a new task parallelism technology called

Grand Central Dispatch (GCD). Apple worked hard on tuning GCD; stated that only 15

instructions are required to queue up a work unit in GCD, while creating a

traditional thread could easily require several hundred instructions.

The main advantage of GCD is that programmer/user does not required to choose

how many thread for application that optimize the most. It can save a lot of

time for programmers because getting the most power out of CPUs requires a lot

of measurement. With GCD, let the operating system decide it for you, it just

works.

Most GCD documentation provided by Apple take focus on user applications: background process, asynchronous callbacks, non-blocking UI, dispatched IOs …etc. GCD and c/obj-c blocks works pretty good in those scenarios, but we want a more general comparison between GCD and traditional thread models. Which is faster? Currently no one has made a general benchmark for this. I targeted to use an industry standard benchmark for GCD vs threads, and I ended up by picking Conjugate Gradient computation in NAS Parallel Benchmark (NPB) maintained by NASA as my benchmark model.

I uses OpenMP implementation in CG problem. It is an shared memory threading API which is much easier to use then POSIX thread. However it is still required for programmer/user to pick thread number in run time or in compile time. NASA only provide fortran code, so I uses Ohio’s C implementation.

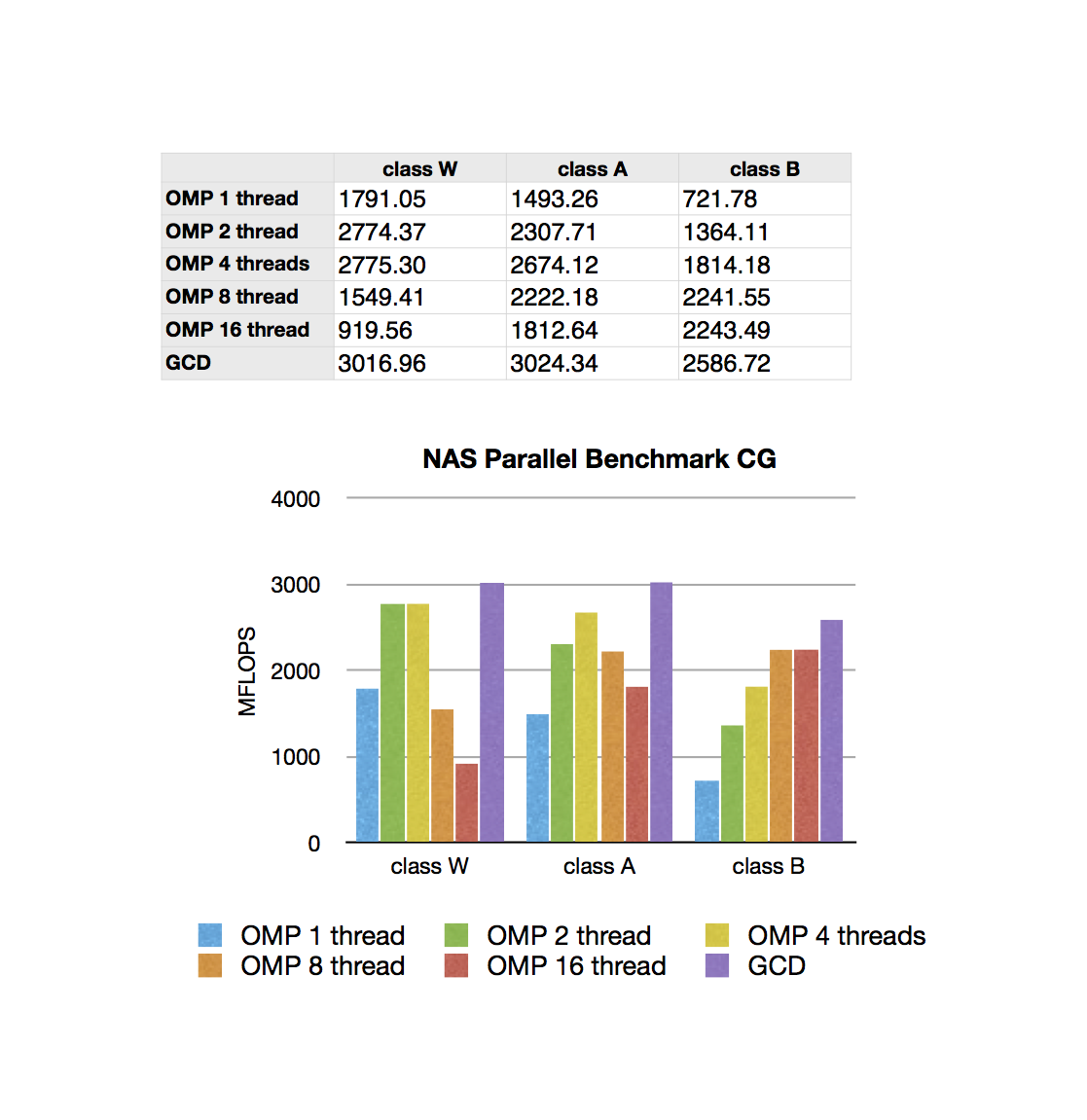

Benchmark result

The result is quite promising! Problem sizes in NPB are predefined and indicated as different classes:

- Class W: vector size: 7000, iterations: 15 (90’s workstation size, now likely too small)

- Class A: vector size: 14000, iterations: 15

- Class B: vector size: 75000, iterations: 75

I tested OpenMP with different thread numbers and it performs differently on different problem size. It not quite obvious to choose a correct thread number for the problem, and GCD implementations beats them all.

Bottleneck implementation

The OpenMP implementation looks like this:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 | |

Other code instead of bottleneck are basically vector initialization, copy, multiply and norm computations. I tested all of these, but they don’t make big differences between OpenMP, GCD, and BLAS1 functions.

GCD implementation looks much like the original code:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 | |

What a great news! It is much easier then I thought to transfer the original code into GCD.

Parallel reduction in OpenMP, GCD, and BLAS

As I concluded before, it doesn’t make big difference between three of these. The implementations are:

1 2 3 4 5 6 7 8 9 | |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 | |

1 2 3 4 5 6 7 | |

I think it does not make difference because those operations are all one dimensional BLAS1 problems.

Note on cache line size

I thought that cache line size matters when I start implementing GCD version of

parallel reduction. But it ended up that you just need to give it a large enough

size for compiler to generate SIMD optimization. Note that you can get the CPU

cache line size by command sysctl -n hw.cachelinesize from shell.

Conclusion

I think the best practice so far is to use BLAS whenever you can. It’s cleaner and highly optimized by libraries developed by Apple, Intel, or other HPC foundations. For other operation that BLAS don’t support, GCD is a good choice and easy to migrate to. The pros and cons go as follows:

OpenMP over GCD

- Supported by gcc, while clang doesn’t.

- Can be used on C, CPP, and Fortran (and many more?)

- Many existing numerical code uses OpenMP

- Easier to get start with. eg.

#omp parallel for - Better syntax in reduction:

#omp reduction (+:sum)

GCD over OpenMP

- Much easier to tune performance.

- Tighter logic construction. Everything is encapsulated in blocks.

- No separated thread spawns and private variables like OpenMP.

- Much less parameters to adjust in compile time and run time.

- Highly optimized in any kinks of problem sizes.

- Works on iOS (OpenMP does not)

I think the greatest advantage you can gain from GCD is that it is highly optimized on different problem sizes, because operating system takeovers task load balancing. It surprised me that on class W problem, OpenMP version with 16 threads is twice as slow compares to 1 thread version. With GCD, you no longer need to handle this unexpected result! Cheers.